Learn what artificial intelligence is, how it works, how it is trained, and how it is used in various industries. Discover the different types of AI, and focus on the basics of neural networks as the modern approach to AI.

What is Artificial Intelligence?

Artificial Intelligence, or AI, is all about making computers or machines think and learn like us humans. Imagine having a robot buddy that can play games, chat, or even help you make dinner. AI is what makes all of this possible! No other technology has come close to making computers this lifelike.

There are two main types of AI: narrow AI and general AI.

Narrow AI is like a smart helper that’s really good at specific tasks, like recommending songs you might like, helping your phone’s camera take better pictures, detecting oncoming cars, or keeping a car inside its highway lane. It’s like having a tool that’s amazing at one single thing, but not so great at everything (or anything) else.

Here are some examples of narrow AI that you might be familiar with:

- Siri, Alexa, and Google Assistant: These voice assistants help you with simple tasks like setting reminders, playing music, and answering questions.

- Email spam filters: They learn to recognize and filter out junk emails from your inbox.

- Self-driving cars: They use AI to understand the roads, follow traffic rules, and avoid collisions.

- Face recognition: Social media platforms like Facebook use AI to identify and tag people in photos.

- Product recommendations: Online stores like Amazon suggest products you might like based on your browsing and purchase history.

General AI, on the other hand, is like a super-smart friend who’s good at many different things. Fortunately or unfortunately, depending on how you look at it, general AI doesn’t quite exist yet. It’s a goal that scientists and researchers are working towards. General AI would be able to understand, learn, and adapt to a wide range of tasks, just like humans.

While we’ve made a lot of progress with AI, we haven’t reached the point where a single AI can be a master of many different things like humans are.

Is ChatGPT a type of general AI?

ChatGPT is actually a type of narrow AI, even though it might seem quite advanced. It’s designed to understand and generate human-like text, and it’s really good at that one task. However, it’s not able to perform a wide range of tasks like a general AI would be able to.

Don’t get us wrong, ChatGPT is still an incredibly impressive and powerful technology that has many exciting applications, from improving language translation to helping us better understand the complexities of human communication.

It is also worth mentioning that some researchers think they observed sparks of real intelligence when conversing with ChatGPT.

If you are interested in learning more about general Artificial Intelligence, or just the idea of an AI awakening, HBO’s Westworld is an incredible TV show to binge-watch. Just be aware that it paints a pretty bleak future of humans living with AI, and it should be watched as fantasy rather than futurology.

How does Artificial Intelligence work?

Let’s break down how artificial intelligence (AI) works in a simple way. The key to AI is making computers learn and think like humans. We do this mainly through a process called machine learning.

Machine learning

Machine learning allows computers to learn on their own by analyzing vast amounts of data, identifying patterns within that data, and creating relationships based on the data.

Imagine you are trying to teach a kid how to ride a bike. How would you go about that task? You would probably show them a bike, explain how to sit and pedal, mount training wheels, and let them try. As they learn to ride, you guide them, let them fail, correct their posture and their pedaling, show them examples of things they can expect, and support them along the way. Machine learning is similar, but for computers.

Traditionally, computers are good at executing instructions written by humans – humans write code, computers take and execute that code. However, this does not allow for much flexibility, as the code written in a traditional way is limited by its set of rules and instructions.

In machine learning, the rules and instructions are learned through the process of learning, rather than being explicitly written beforehand.

In traditional programming, to make a computer ride a bike, you would program instructions for every step of the process. In machine learning, you teach your model to do every step of the process, rather than hardcoding it. This usually takes longer than writing the rules by hand, but once it is done, your model has the capability to learn how to ride anything that resembles a bike.
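To make the contrast concrete, here is a minimal sketch using a toy spam check instead of a bike. Everything in it is made up for illustration: the function names, the "number of links" feature, the hand-picked threshold of 5, and the six labeled examples. In the first function a human writes the rule. In the second, the rule (a threshold) is picked from the examples.

```python
# Traditional programming: a human decides the rule.
def is_spam_rule(num_links: int) -> bool:
    return num_links > 5  # threshold picked by hand (hypothetical)


# Machine learning, in miniature: the rule is picked from labeled examples.
def learn_threshold(examples: list[tuple[int, bool]]) -> int:
    """Return the link-count threshold that misclassifies the fewest examples."""
    candidates = sorted({links for links, _ in examples})

    def errors(threshold: int) -> int:
        # Count examples where the prediction disagrees with the label.
        return sum((links > threshold) != label for links, label in examples)

    return min(candidates, key=errors)


# Made-up training data: (number of links in the email, is it spam?)
examples = [(0, False), (1, False), (2, False), (3, True), (4, True), (8, True)]
threshold = learn_threshold(examples)


def is_spam_learned(num_links: int) -> bool:
    return num_links > threshold
```

With this data, the learned rule flags an email with 3 links as spam, while the hand-written rule does not. If the examples change, the learned threshold changes with them and nobody has to rewrite the code.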

Here’s a step-by-step breakdown of how machine learning works:

- We collect data that our AI will learn from: Just like we learn from experiences, AI needs lots of examples (data) to learn from. This can be anything, like pictures of cats, movie reviews, or temperature readings.

- We preprocess the data so it is organized and labeled: We clean up and organize the data, so it’s easier for the AI to learn from it. This is extremely important and a key step in building any successful AI.

- Choose a learning model: Now we come to the brains of the whole thing. A model is like a recipe that the AI will follow to learn from the data, and picking the right one for the job we want to do is key. Some of the approaches we can pick from are linear regression, logistic regression, neural networks, deep learning, and reinforcement learning. Don’t worry if you don’t know what any of this means, we’ll get to it soon.

- Train the model: We feed the data to the model, and it starts learning patterns and relationships within the data. It’s like practicing a skill over and over, but at a rate that only computers can achieve.

- Test the model: After the AI has learned from the data, we test it to see how well it can make predictions or decisions based on what it learned. This is like giving a quiz to a student.

- Fine-tune the model: If the AI doesn’t do so well on the test, we tweak the model to help it learn better and test it again. It’s like studying more and retaking a test to get a better score.

- Use the AI: Once the AI is good at its task, we can use it to do cool things like recommend music, help cars drive themselves, or chat with people.
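The steps above can be sketched in a few lines of Python. This is only a toy under stated assumptions: the animal measurements are invented, the "model" is a very simple nearest-average classifier chosen for brevity (it is not one of the models discussed in this guide), and the names `train` and `predict` are ours. The fine-tuning step is left out because this toy model has nothing to tweak.

```python
import random

# 1. Collect data: made-up (height_cm, weight_kg) measurements with labels.
raw = [
    ((25.0, 4.0), "cat"), ((23.0, 3.5), "cat"), ((26.0, 5.0), "cat"), ((24.0, 4.5), "cat"),
    ((60.0, 30.0), "dog"), ((55.0, 25.0), "dog"), ((65.0, 35.0), "dog"), ((58.0, 28.0), "dog"),
    ((None, 4.2), "cat"),  # an incomplete record
]

# 2. Preprocess: drop incomplete rows, shuffle, and split into train and test sets.
data = [(point, label) for point, label in raw if None not in point]
random.Random(0).shuffle(data)
train_rows, test_rows = data[:6], data[6:]


# 3. Choose a model: here, one average point (centroid) per label.
def train(rows):
    """4. Train: compute the average height and weight for each label."""
    sums = {}
    for (height, weight), label in rows:
        s = sums.setdefault(label, [0.0, 0.0, 0])
        s[0] += height
        s[1] += weight
        s[2] += 1
    return {label: (s[0] / s[2], s[1] / s[2]) for label, s in sums.items()}


def predict(model, point):
    """Return the label whose centroid is closest to the point."""
    def squared_distance(centroid):
        return (centroid[0] - point[0]) ** 2 + (centroid[1] - point[1]) ** 2

    return min(model, key=lambda label: squared_distance(model[label]))


model = train(train_rows)

# 5. Test: measure accuracy on rows the model has not seen.
correct = sum(predict(model, point) == label for point, label in test_rows)
accuracy = correct / len(test_rows)

# 6. Use the model on a brand-new measurement.
guess = predict(model, (62.0, 31.0))
```

Real projects follow the same outline, only with far more data, a more capable model, and several rounds of testing and tweaking.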

Learning models in machine learning

We mentioned that machine learning can use different models to do the actual “learning” part of the task. Here’s a quick overview of some popular models, but do be aware that this is a simplistic look at the whole thing:

- Linear Regression: This is a simple model used to predict a number based on some input features. It’s like trying to guess someone’s age based on their height and weight.

- Logistic Regression: Similar to linear regression, but used for classification tasks, like figuring out if an email is spam or not spam.

- Neural Networks: These models are inspired by the human brain and consist of layers of interconnected nodes (neurons). They can learn complex patterns and are widely used in image and speech recognition.

- Deep Learning: This is a type of neural network with many layers. It’s called “deep” because of the depth of the layers. These models are great for tasks like image and speech recognition, natural language processing, and more.

- Reinforcement Learning: This model learns by interacting with its environment, like a video game character learning to navigate a maze. It gets feedback (rewards or penalties) for its actions and adjusts its strategy accordingly.
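To give the first two models a concrete shape, here is a small sketch. The data points are invented, the linear regression uses the standard least-squares formula for a single input, and the logistic regression weights (`weight=1.5`, `bias=-3.0`) are hand-picked rather than learned, purely to show how a score becomes a probability.

```python
import math


def fit_line(xs, ys):
    """Linear regression with one input: least-squares slope and intercept."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data that lies exactly on the line y = 2x + 1.
slope, intercept = fit_line([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
predicted_number = slope * 5.0 + intercept  # linear regression predicts a number


def spam_probability(num_links, weight=1.5, bias=-3.0):
    """Logistic regression squeezes a linear score into a probability (0 to 1)."""
    score = weight * num_links + bias
    return 1.0 / (1.0 + math.exp(-score))


# Logistic regression predicts a class by thresholding the probability.
is_spam = spam_probability(5) > 0.5
```

The two models share the same linear core. The difference is what comes out: a number for linear regression, a yes-or-no decision for logistic regression.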

ChatGPT itself is a neural network, based on Google’s Transformer model, and the GPT part stands for Generative Pre-trained Transformer. The Transformer model was introduced in Google’s “Attention Is All You Need” research paper, and it was a bloody revolution in the field of natural language processing.

For the rest of this “for dummies” guide, we will focus on neural networks, as they are all the rage right now, and they have the potential to become the most widely used type of machine learning model.

Neural Networks

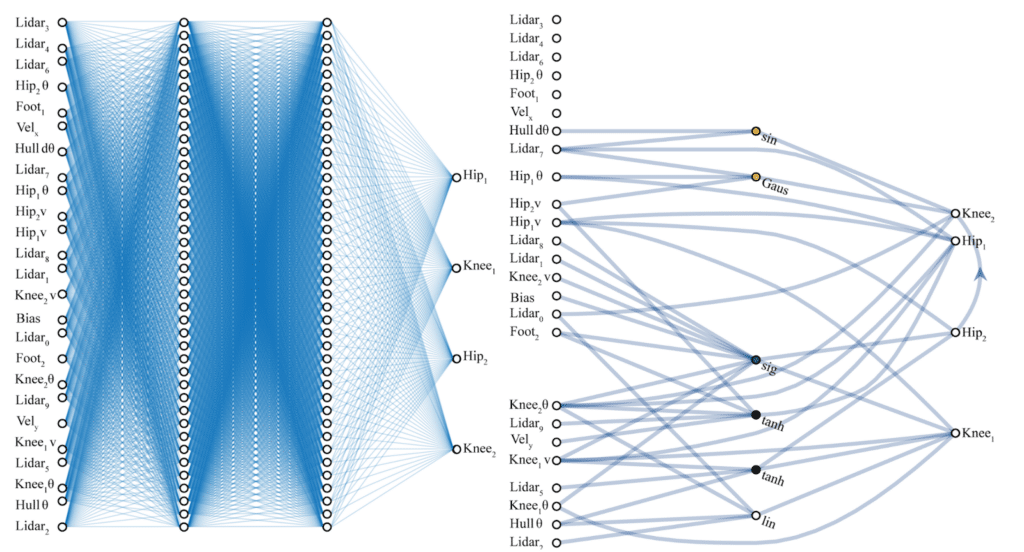

Let’s dive into neural networks. Neural networks are a type of machine learning model inspired by the human brain, and they’re great at learning complex patterns. Here’s a simple breakdown of how they work. It is fine if you don’t understand everything at this moment:

- Neurons: Neural networks are made up of artificial neurons, which are like little processing units. Each neuron takes input, does some math on it, and then sends the result to other neurons.

- Layers: Neurons are organized into layers. There’s an input layer, an output layer, and one or more hidden layers in between. The input layer receives the data, and the output layer gives us the final result. The hidden layers do the heavy lifting and help the network learn complex patterns.

- Connections: Neurons in one layer are connected to neurons in the next layer. Each connection has a weight, which is a number that determines how much influence one neuron has on another. The network learns by adjusting these weights.

- Activation functions: Each neuron has an activation function, which helps decide whether the neuron should “fire” (send a signal) or not. Common activation functions include the sigmoid, ReLU, and tanh functions. It is important to keep in mind that these functions exist, and the choice of which to use is up to the network designer.

- Training: To train a neural network, we feed it lots of data and adjust the weights of the connections to minimize the difference between the network’s predictions and the actual answers (known as the error). This process is usually done using a technique called backpropagation and an optimization algorithm, like gradient descent.

- Testing: After training, we test the neural network on new, unseen data to see how well it generalizes to new situations.
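The neurons, layers, connections, and activation functions described above fit in a short sketch. The network size (2 inputs, 2 hidden neurons, 1 output) and all the weights and biases are hand-picked for illustration, and the helper names `neuron` and `layer` are ours. A real network would learn its weights during training.

```python
import math


# Three common activation functions.
def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    return max(0.0, x)


def tanh(x):
    return math.tanh(x)


def neuron(inputs, weights, bias, activation):
    """Multiply inputs by weights, add them up, then apply the activation."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return activation(total)


def layer(inputs, weight_rows, biases, activation):
    """A layer is a list of neurons that all see the same inputs."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


# Input layer: two numbers.
inputs = [1.0, 2.0]
# Hidden layer: two ReLU neurons with hand-picked (hypothetical) weights.
hidden = layer(inputs, [[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0], relu)
# Output layer: one sigmoid neuron, so the result lands between 0 and 1.
output = layer(hidden, [[1.0, -1.0]], [2.0], sigmoid)
```

Here the first hidden neuron computes a negative sum, so ReLU turns it into 0 and that neuron stays silent. The second one passes its value on to the output neuron.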

Neural networks are popular for tasks like image recognition, speech recognition, and natural language processing. They can be simple with just a few neurons or very complex with millions of neurons and many layers.

Admittedly, the most interesting part of a neural network is the training process, and most of the value that we can get from a neural network is influenced by its training.

Training a neural network

Training a neural network involves adjusting its connection weights so that it can learn from the data. Let me break down the process for you:

- Initialize weights: Before training starts, we randomly assign small numbers to the weights of the connections between neurons.

- Feedforward: We input the data into the network and let the signals flow through the layers, from input to output. At each neuron, the input signals are multiplied by the connection weights, summed up, and passed through an activation function. The output layer gives us the network’s predictions.

- Calculate error: We compare the network’s predictions to the actual answers (called targets) and calculate the error (or the difference between them). Commonly used error functions include mean squared error and cross-entropy loss.

- Backpropagation: This is the key step in training a neural network. We start from the output layer and work our way back through the layers, calculating how much each weight contributed to the error. This process involves some calculus, specifically, calculating partial derivatives of the error with respect to the weights.

- Update weights: We use an optimization algorithm, like gradient descent, to adjust the weights based on the calculated errors. The goal is to minimize the error by updating the weights in small steps. We often use a learning rate to control the size of these steps.

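- Repeat: We repeat the feedforward, error calculation, backpropagation, and weight update steps for all the data samples in our training dataset. One full pass over the training data is called an epoch, and training usually runs for many epochs. The network keeps learning and adjusting its weights until the error stops getting meaningfully smaller.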

- Validation: During training, we also periodically check the network’s performance on a separate validation dataset. This helps us monitor the progress and avoid overfitting (when the network becomes too good at the training data but performs poorly on new, unseen data).
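Here is a minimal sketch of that loop in plain Python, under several assumptions of ours: a tiny network with 2 inputs, 2 hidden neurons, and 1 output, sigmoid activations everywhere, squared error as the error function, a learning rate of 0.5, and the logical OR function as made-up training data. The validation step is skipped because four samples are too few to set any aside.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Made-up training data: inputs and targets for the logical OR function.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

rng = random.Random(42)
n_hidden = 2
# Initialize weights: small random numbers.
w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(n_hidden)]
b_hidden = [0.0] * n_hidden
w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
b_out = 0.0
learning_rate = 0.5


def forward(x):
    """Feedforward: return the hidden activations and the prediction."""
    h = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
         for ws, b in zip(w_hidden, b_hidden)]
    y = sigmoid(sum(w * hi for w, hi in zip(w_out, h)) + b_out)
    return h, y


def mean_squared_error():
    return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)


initial_loss = mean_squared_error()
losses = []
for epoch in range(2000):  # Repeat: one epoch is one pass over the data.
    for x, target in data:
        h, y = forward(x)
        # Calculate error: (y - target) ** 2 for this sample.
        # Backpropagation: chain rule, from the output back to the hidden layer.
        delta_out = 2 * (y - target) * y * (1 - y)
        delta_hidden = [delta_out * w_out[j] * h[j] * (1 - h[j])
                        for j in range(n_hidden)]
        # Update weights: one small gradient descent step per weight.
        for j in range(n_hidden):
            w_out[j] -= learning_rate * delta_out * h[j]
            b_hidden[j] -= learning_rate * delta_hidden[j]
            for i in range(2):
                w_hidden[j][i] -= learning_rate * delta_hidden[j] * x[i]
        b_out -= learning_rate * delta_out
    losses.append(mean_squared_error())
```

Comparing `losses[-1]` with `initial_loss` shows how far the error dropped. Libraries such as PyTorch or TensorFlow compute the backpropagation part automatically, so in practice you rarely write these derivatives by hand.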

That’s a simple overview of how neural networks are trained! There’s a lot more to it, but I hope this gives you a good starting point.

Where does the AI training data come from?

The training data for AI models, like GPT-3, usually comes from a variety of sources. The goal is to have a diverse and extensive dataset to help the model learn and understand different topics, languages, and writing styles. Here are some common sources of training data:

- Text from the internet: A large portion of the training data comes from text available on the internet, such as websites, blogs, and articles. This provides a wide range of topics and styles for the model to learn from.

- Books, research papers, and reports: These sources can help the model learn more formal language, domain-specific terminology, and complex concepts.

- Social media posts and comments: Text from social media platforms like Twitter, Facebook, and Reddit can help the model learn informal language, slang, and colloquial expressions.

- Datasets from academic and industry research: Researchers and organizations often release datasets specifically created for AI training. These datasets can include text from various sources and can be focused on specific tasks or domains.

- Publicly available data repositories: There are many repositories of text data available online, like Project Gutenberg and Common Crawl, that can be used as training data.

To ensure the model learns effectively, the training data must be cleaned and preprocessed. This involves removing irrelevant or inappropriate content, fixing typos and formatting issues, and sometimes translating text to a common language.
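As a rough idea of what cleaning can look like, here is a small sketch using only Python’s standard library. The function names, the sample documents, and the specific rules (strip HTML tags, collapse whitespace, drop texts shorter than three words, drop duplicates) are our own illustrative choices, not the pipeline of any particular model.

```python
import html
import re


def clean_text(raw: str) -> str:
    """Remove HTML tags, decode entities, and tidy up whitespace."""
    text = re.sub(r"<[^>]+>", " ", raw)  # drop HTML tags
    text = html.unescape(text)           # turn &amp; back into &
    text = re.sub(r"\s+", " ", text)     # collapse runs of whitespace
    return text.strip()


def preprocess(documents):
    """Clean every document, then drop very short texts and duplicates."""
    seen = set()
    cleaned = []
    for doc in documents:
        text = clean_text(doc)
        key = text.lower()
        if len(text.split()) < 3 or key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


# Made-up sample documents.
docs = [
    "<p>Cats &amp; dogs   are popular pets.</p>",
    "Cats & dogs are popular pets.",
    "Click here!",
    "Neural networks learn\nfrom examples.",
]
result = preprocess(docs)
```

Out of the four sample documents, two survive: the second is a duplicate of the first once the HTML is removed, and the third is too short to be useful.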

Keep in mind that AI models like GPT-3 can’t access new information beyond their training data, so their knowledge is limited to the information available at the time of training.

Examples of Neural Networks

Neural networks have been used in a wide range of applications, thanks to their ability to learn complex patterns and relationships. Here are some examples of how they’re being used:

- Image recognition: Convolutional Neural Networks (CNNs) are a popular choice for image recognition tasks, like identifying objects in images, facial recognition, or diagnosing diseases from medical scans. For example, Google Photos uses neural networks to automatically tag and organize your photos based on their content.

- Natural language processing (NLP): Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and the Transformer architecture are commonly used for NLP tasks like machine translation, sentiment analysis, and text generation. For instance, Google Translate uses neural networks to provide more accurate translations between languages.

- Speech recognition: Neural networks, like LSTMs and CNNs, are used to convert spoken language into written text in applications like Siri, Google Assistant, and Alexa.

- Game playing: Deep Reinforcement Learning, which combines deep neural networks with reinforcement learning, has been used to teach AI agents to play games like Go, chess, and various video games. Google’s DeepMind developed AlphaGo, an AI that defeated Lee Sedol, one of the world’s top Go players, in 2016.

- Recommender systems: Neural networks can be used to provide personalized recommendations for users on platforms like Netflix, YouTube, and Amazon, by analyzing their preferences and browsing history.

- Autonomous vehicles: Neural networks help self-driving cars understand their environment, identify objects, and make decisions in real-time.
