Algorithmic bias can impact various aspects of our lives, from getting online content recommendations to finding jobs and making financial decisions.

Bias is in human nature. Different people have different genders, races, upbringings, educational backgrounds, cultures, beliefs, experiences, and so on.

Thus, their opinions, thoughts, likes, dislikes, and preferences differ, and they can develop biases toward or against certain categories.

Machines are no different. They, too, can treat people, things, and events differently because of biases introduced in their algorithms. These biases can cause AI and ML systems to produce unfair results that harm people in many ways.

In this article, I’ll discuss what algorithmic biases are, their types, and how to detect and reduce them in order to enhance fairness in results.

Let’s begin!

What Are Algorithmic Biases?

An algorithmic bias is the tendency of ML and AI algorithms to reflect human-like biases and generate unfair outputs. The biases could be based on gender, age, race, religion, ethnicity, culture, and so on.

In the context of artificial intelligence and machine learning, algorithmic biases are systematic, repeatable errors introduced in a system that produce unfair outcomes.

Biases in algorithms can arise for many reasons, such as decisions about how data is collected, chosen, coded, or used to train the algorithm, the algorithm’s intended use, and its design.

Example: Algorithmic bias can show up in search engine results, where it can reinforce social biases or lead to privacy violations.

There are many cases of algorithmic bias in areas such as election outcomes, the spread of hate speech online, healthcare, criminal justice, and recruitment. These cases compound existing gender, racial, economic, and social biases.

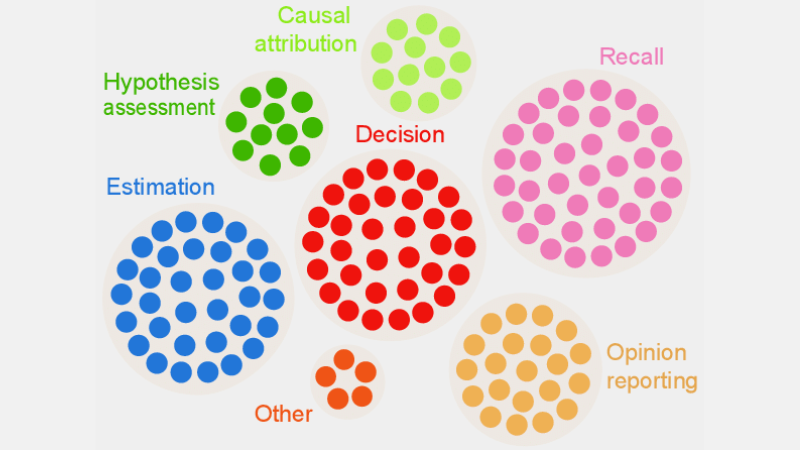

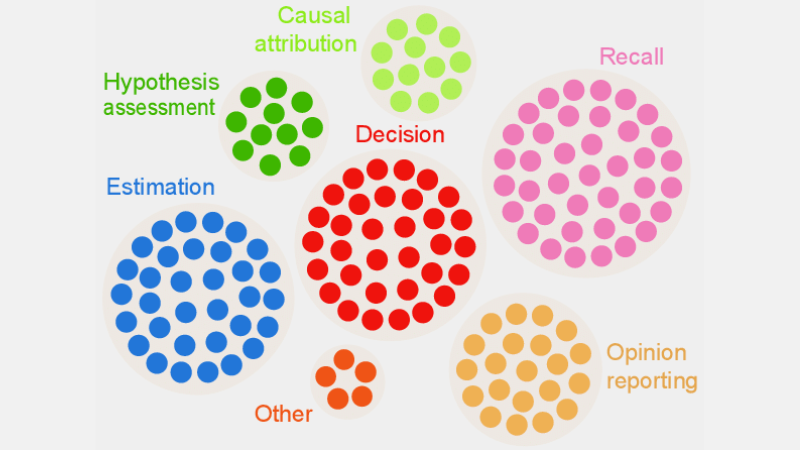

Types of Algorithmic Biases

#1. Data Bias

Data bias happens when the data used to train an AI model doesn’t properly represent real-world scenarios or populations, typically because the dataset is unbalanced or skewed.

Example: Suppose facial recognition software is trained primarily on images of white people. It may then perform poorly at recognizing people with darker skin tones, putting them at a disadvantage.

#2. Measurement Bias

This bias can arise due to an error in the measurement or data collection process.

Example: If you train a healthcare diagnostic algorithm to detect an illness using indirect metrics such as the number of previous doctor visits, the model may rely on those measurements and overlook actual symptoms, producing biased diagnoses.

#3. Model Bias

Model biases arise while designing the algorithm or the AI model.

Example: Suppose an AI system uses an algorithm designed to maximize profit at any cost. It might end up prioritizing financial gains over business ethics, safety, fairness, and so on.

#4. Evaluation Bias

Evaluation bias can occur when the factors or criteria for evaluating an AI system’s performance are biased.

Example: If an AI system for evaluating employee performance relies on standardized tests that favor a specific category of employees, it may reinforce inequalities.

#5. Reporting Bias

Reporting bias can occur when the frequency of events in the training dataset doesn’t accurately reflect how often they occur in reality.

Example: If an AI security tool performs poorly on a specific category, it may flag that entire category as suspicious.

This can happen when the training dataset marked every historical incident related to that category as insecure because incidents in that category were reported more frequently than others.

#6. Selection Bias

Selection bias arises when training data is selected without proper randomization or doesn’t represent the overall population well.

Example: If a facial recognition tool is trained on limited data, it may perform worse on groups it rarely encountered during training. For instance, it may be less accurate at identifying women of color in politics than male, lighter-skinned politicians.
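When you draw a training subset from a larger pool, one common way to guard against selection bias is stratified random sampling: sample at random within each group so that every group keeps roughly its share of the full population. The sketch below is a minimal plain-Python version; the record format, the `group` field, and the 10% fraction are illustrative assumptions.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, fraction, seed=0):
    """Randomly sample `fraction` of the records within each stratum.

    `key` returns the stratum (e.g., a demographic group) of a record,
    so each group keeps roughly its share of the population.
    """
    rng = random.Random(seed)  # fixed seed for a reproducible sample
    strata = defaultdict(list)
    for record in records:
        strata[key(record)].append(record)

    sample = []
    for members in strata.values():
        k = round(len(members) * fraction)  # per-group sample size
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical pool: 700 records from group "A", 300 from group "B".
population = [{"id": i, "group": "A" if i < 700 else "B"} for i in range(1000)]
subset = stratified_sample(population, key=lambda r: r["group"], fraction=0.1)
counts = {g: sum(1 for r in subset if r["group"] == g) for g in ("A", "B")}
print(counts)  # {'A': 70, 'B': 30}
```

Stratified sampling only preserves the proportions already present in the pool; if the pool itself underrepresents a group, you still need to collect more data for that group.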

#7. Implicit Bias

Implicit bias arises when an AI algorithm encodes assumptions drawn from its developers’ personal experiences, which may not apply to broader categories of people.

Example: If a data scientist designing an AI algorithm personally believes that women prefer pink over blue or black, the system may recommend products accordingly, even though that doesn’t apply to every woman. Many prefer blue or black.

#8. Group Attribution Bias

This bias can happen when algorithm designers assume that what is true of certain individuals is true of an entire group, whether or not those individuals actually represent it. Group attribution bias is common in recruitment and admission tools.

Example: An admission tool may favor candidates from a specific school, discriminating against students who attended other schools.

#9. Historical Bias

Historical datasets are an important source of training data for ML algorithms. But if you aren’t careful, biases present in that historical data can carry over into your algorithms.

Example: If you train an AI model with 10 years of historical data to shortlist candidates for technical positions, it might favor male applicants if the training data has more men in it than women.

#10. Label Bias

Training ML algorithms often requires labeling large amounts of data. However, labeling practices can vary widely, creating inconsistencies that introduce bias into the AI system.

Example: Suppose you train an AI algorithm to detect cats by drawing bounding boxes around them in images. If the labeled examples mostly show cats’ faces, the algorithm might fail to identify a cat whose face isn’t visible, while correctly identifying cats whose faces are shown.

This means the algorithm is biased toward pictures of front-facing cats. It can’t identify a cat photographed from a different angle, where the body is visible but the face is not.
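One way to catch inconsistent labeling before it turns into label bias is to have two annotators label the same items and measure how often they agree beyond chance. The sketch below computes Cohen’s kappa, a standard agreement statistic, in plain Python: values near 1 mean strong agreement, and values near 0 mean agreement no better than chance. The labels are made up for illustration, and what counts as “too low” is a judgment call for your project.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators' labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items both annotators labeled the same way.
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random
    # with their own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_expected == 1:
        return 1.0  # both annotators used the same single label throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical labels from two annotators for the same six images.
annotator_1 = ["cat", "cat", "no_cat", "cat", "no_cat", "cat"]
annotator_2 = ["cat", "no_cat", "no_cat", "cat", "no_cat", "no_cat"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 2))  # 0.4
```

A low score suggests the labeling guidelines are ambiguous, for example about whether a cat seen only from behind should be boxed, and are worth tightening before training.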

#11. Exclusion Bias

Exclusion bias arises when a specific person, group of people, or category is excluded from the data, intentionally or unintentionally, because they are thought to be irrelevant. It mostly happens during the data preparation stage of the ML lifecycle, when data is cleaned and prepared for use.

Example: Suppose an AI-based prediction system has to estimate a product’s popularity during winter based on its purchase rate. A data scientist notices some purchases in October and removes those records as erroneous, assuming winter runs from November to January. But in some places, winter extends beyond those months. As a result, the algorithm will be biased toward countries where winter falls between November and January.
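Before dropping records as “erroneous” during cleaning, it can help to check which groups the rule actually removes. The sketch below uses hypothetical purchase records with a region and a month, and counts the share of each region’s records that a November-to-January filter would discard. A region that loses far more of its data than others is a warning sign of exclusion bias.

```python
from collections import Counter

# Hypothetical purchase records: (region, month number, 1 = January).
purchases = [
    ("north", 10), ("north", 11), ("north", 12), ("north", 1), ("north", 10),
    ("south", 11), ("south", 12), ("south", 1), ("south", 12),
]

# The cleaning rule's assumed definition of winter: November to January.
WINTER_MONTHS = {11, 12, 1}


def dropped_share_by_group(records, keep):
    """Return, for each region, the fraction of its records the rule would drop."""
    total = Counter(region for region, _ in records)
    dropped = Counter(region for region, month in records if not keep(month))
    return {region: dropped[region] / total[region] for region in total}


shares = dropped_share_by_group(purchases, keep=lambda month: month in WINTER_MONTHS)
print(shares)  # {'north': 0.4, 'south': 0.0}
```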

How Are Biases Introduced into Algorithms?

Training Data

The main source of algorithmic bias is biased data used to train AI and ML algorithms. If the training data itself reflects inequalities and prejudices, the algorithm will learn them and perpetuate the biases.

Design

When designing the algorithm, developers might knowingly or unknowingly build their personal views or preferences into the AI system. The system may then be biased toward certain categories.

Decision-Making

Data scientists and leaders often make decisions based on their personal experiences, surroundings, beliefs, and so on. These decisions are reflected in the algorithms they build, which can cause bias.

Lack of Diversity

When a development team lacks diversity, its members may end up creating algorithms that don’t represent the entire population. Without experience of or exposure to other cultures, backgrounds, beliefs, and ways of life, they can build algorithms that are biased in particular ways.

Data Preprocessing

The methods used to clean and process data can introduce algorithmic bias. And if you don’t design these methods carefully to counter bias, they can make existing bias more severe in the AI model.

Architecture

The model architecture and the type of ML algorithm you choose can also introduce bias. Some algorithms are more prone to bias than others, depending on how they are designed.

Feature Selection

The features you choose to train an AI algorithm can also cause bias. If you don’t consider how each feature affects the fairness of the output, bias can arise that favors some categories.
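A feature can encode a protected attribute indirectly (a so-called proxy), even when the attribute itself is left out. A quick, admittedly crude screen is to measure how strongly each candidate feature correlates with the protected attribute. The sketch below computes the Pearson correlation between a numeric feature and a binary group indicator (the point-biserial correlation) in plain Python. The feature and its values are hypothetical, and a low correlation does not rule out nonlinear proxies or proxies formed by combining several features.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: 1 = member of the protected group, 0 = not.
group = [1, 1, 1, 0, 0, 0]
# Hypothetical candidate feature, e.g. a neighbourhood-level score.
neighbourhood_score = [2.0, 3.0, 2.5, 8.0, 7.5, 9.0]

r = pearson(neighbourhood_score, group)
print(round(r, 2))
```

Here the correlation is strongly negative, so the feature would largely reveal group membership even if the group column were dropped.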

History and Culture

If an algorithm is trained on historical data or data from particular cultures, it can inherit their prejudices, beliefs, norms, and so on. These biases can affect AI outcomes even when they are unfair or no longer relevant today.

Data Drift

Data that you use to train your AI algorithms today may become irrelevant or obsolete in the future as technology and society change. If you keep relying on such outdated data, it can introduce bias and hamper performance.
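One way to notice data drift is to compare the distribution of a feature at training time with its distribution in recent data. The sketch below computes the population stability index (PSI), a common drift measure, for a categorical feature in plain Python. The age bands and shares are hypothetical, and the often-quoted rule of thumb that a PSI above roughly 0.25 signals a significant shift is a convention, not a hard threshold.

```python
import math


def population_stability_index(expected_shares, actual_shares, eps=1e-6):
    """PSI between two categorical distributions given as {category: share}."""
    psi = 0.0
    for category in set(expected_shares) | set(actual_shares):
        # Clamp to a small value so an empty category doesn't cause log(0).
        e = max(expected_shares.get(category, 0.0), eps)
        a = max(actual_shares.get(category, 0.0), eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical age-band shares at training time vs. in recent production data.
training = {"18-30": 0.5, "31-50": 0.3, "51+": 0.2}
recent = {"18-30": 0.3, "31-50": 0.3, "51+": 0.4}

psi = population_stability_index(training, recent)
print(round(psi, 3))
```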

Feedback Loops

Some AI systems not only interact with users but also adapt to their behavior, which can amplify existing bias. When users’ personal biases enter the AI system this way, they can create a biased feedback loop.

How to Detect Algorithmic Bias?

Define What’s “Fair”

To detect unfair outcomes or biases in algorithms, you first need to define exactly what “fair” means for your AI system. For this, consider attributes such as gender, age, race, sexuality, region, and culture.

Then decide which metrics you will use to measure fairness, such as equal opportunity, predictive parity, or disparate impact. Once you have defined “fairness”, it becomes easier to detect what isn’t fair and resolve the situation.
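As an illustration of two of those metrics: equal opportunity compares true positive rates across groups, and predictive parity compares positive predictive values (precision). The sketch below computes both per group in plain Python, assuming binary labels and predictions where 1 is the favorable outcome. The data is made up, and how large a gap is acceptable is a policy decision rather than something the code can settle.

```python
def group_rates(y_true, y_pred, groups):
    """Per-group true positive rate (TPR) and positive predictive value (PPV)."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        tp = sum(1 for i in idx if y_true[i] == 1 and y_pred[i] == 1)
        fn = sum(1 for i in idx if y_true[i] == 1 and y_pred[i] == 0)
        fp = sum(1 for i in idx if y_true[i] == 0 and y_pred[i] == 1)
        rates[g] = {
            "tpr": tp / (tp + fn) if tp + fn else float("nan"),
            "ppv": tp / (tp + fp) if tp + fp else float("nan"),
        }
    return rates


# Hypothetical labels, predictions, and group membership.
y_true = [1, 1, 0, 1, 0, 1, 1, 0, 1, 0]
y_pred = [1, 1, 0, 1, 1, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5

rates = group_rates(y_true, y_pred, groups)
# Equal opportunity: difference in true positive rates between groups.
equal_opportunity_gap = rates["A"]["tpr"] - rates["B"]["tpr"]
# Predictive parity: difference in positive predictive values between groups.
predictive_parity_gap = rates["A"]["ppv"] - rates["B"]["ppv"]
print(round(equal_opportunity_gap, 2), round(predictive_parity_gap, 2))  # 0.67 0.25
```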

Audit Training Data

Analyze your training data thoroughly to look for imbalances and inconsistencies in how different categories are represented. Examine the feature distributions and check whether they reflect real-world demographics.
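A minimal sketch of that representation check: compare each group’s share of the training data with its share of a reference population. The group labels and reference shares below are placeholders, not real demographics; in practice, the reference would come from a source such as census data for the population the system will serve.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Each group's share in the data minus its share in the reference.

    Negative values mean the group is underrepresented in the training data.
    """
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts[group] / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical group labels for a training set and reference population shares.
training_groups = ["lighter_skin"] * 80 + ["darker_skin"] * 20
reference = {"lighter_skin": 0.6, "darker_skin": 0.4}

gaps = representation_gaps(training_groups, reference)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```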

To visualize data, you can create histograms, heatmaps, scatter plots, and so on, to highlight disparities and patterns that summary statistics alone might not reveal.

Apart from internal audits, you can involve external experts and auditors to evaluate system bias.

Measure Model Performance

To detect biases, measure your AI model’s performance across various demographics and categories. It helps to split your evaluation data into groups based on race, gender, and so on, and then use your fairness metrics to calculate the differences in outcomes between them.
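For example, one widely used outcome comparison is the ratio of favorable-outcome (selection) rates between groups, often called disparate impact. In US employment contexts, a ratio below 0.8 (the “four-fifths rule”) is commonly treated as a red flag. The sketch assumes binary predictions where 1 means the favorable outcome (e.g., shortlisted), uses the labels “privileged” and “unprivileged” for the two groups being compared, and runs on made-up data.

```python
def selection_rates(y_pred, groups):
    """Share of favorable (1) predictions within each group."""
    rates = {}
    for g in sorted(set(groups)):
        preds = [p for p, grp in zip(y_pred, groups) if grp == g]
        rates[g] = sum(preds) / len(preds)
    return rates


def disparate_impact(y_pred, groups, unprivileged, privileged):
    """Unprivileged group's selection rate divided by the privileged group's."""
    rates = selection_rates(y_pred, groups)
    if rates[privileged] == 0:
        raise ValueError("privileged group has no favorable outcomes")
    return rates[unprivileged] / rates[privileged]


# Hypothetical shortlisting decisions (1 = shortlisted).
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["men"] * 5 + ["women"] * 5

ratio = disparate_impact(y_pred, groups, unprivileged="women", privileged="men")
print(f"disparate impact: {ratio:.2f}")  # disparate impact: 0.50
if ratio < 0.8:
    print("below the four-fifths threshold; investigate further")
```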

Use Suitable Algorithms

Choose algorithms that promote fair outcomes and can address bias when training an AI model. Fairness-aware algorithms aim to reduce bias and keep prediction quality comparable across categories.

Bias Detection Software

You can use specialized fairness-aware tools and libraries to detect biases. These tools offer fairness metrics, visualizations, statistical tests, and more. Popular options include IBM’s open-source AI Fairness 360 (AIF360) toolkit and Fairlearn.

Seek User Feedback

Ask users and customers for feedback on the AI system. Encourage them to give honest reviews if they have experienced any unfair treatment or bias. This feedback will help you find issues that automated tools and other detection procedures might not flag.

How to Mitigate Bias in Algorithms

Diversify Your Company

Building a diverse company and development team helps you detect and remove biases faster, because people who are affected by a bias tend to notice it quickly.

So, diversify your company not only in demographics but also in skills and expertise. Include people of different genders, identities, races, skin colors, economic backgrounds, etc., as well as people with different educational experiences and backgrounds.

This way, you will get to collect wide-ranging perspectives, experiences, cultural values, likes and dislikes, etc. This will help you enhance the fairness of your AI algorithms, reducing biases.

Promote Transparency

Be transparent with your team and your users about the objective, algorithms, data sources, and decisions behind an AI system. This will help users understand how the AI system works and why it generates certain outputs, which fosters trust.

Fairness Aware Algorithms

Use fairness-aware algorithms when developing the model to help ensure fair outcomes for different categories. This is especially important if you build AI systems for highly regulated industries like finance and healthcare.
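One well-known fairness-aware preprocessing technique is reweighing, proposed by Kamiran and Calders: each training example gets a weight so that, in the weighted data, group membership and the label are statistically independent. The weight for an example in group g with label y is P(g) · P(y) / P(g, y). The sketch below computes these weights in plain Python on hypothetical hiring data; feeding them into a learner (for example, through the `sample_weight` argument that many scikit-learn estimators accept in `fit`) is left out.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    Combinations rarer than independence would predict (e.g., a favorable
    label in a disadvantaged group) get weights above 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of favorable (1) labels within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den


# Hypothetical historical hiring data: 1 = hired.
groups = ["men"] * 6 + ["women"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

# Unweighted hire rates are 4/6 for men and 1/4 for women; after reweighing
# both groups have the same weighted rate.
print(weighted_positive_rate(groups, labels, weights, "men"))
print(weighted_positive_rate(groups, labels, weights, "women"))
```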

Evaluate Model Performance

Test your models to examine the AI’s performance across various groups and subgroups. This helps reveal issues that aren’t visible in aggregate metrics. You can also simulate different scenarios, including complex ones, to check how the model performs in each.
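To illustrate why subgroup checks matter, the sketch below computes accuracy overall, per single attribute, and per combined subgroup (here gender and skin tone together). The evaluation records are made up so that the model’s weakness is concentrated in one combination; the attribute names and values are hypothetical.

```python
from collections import defaultdict


def accuracy_by(records, key):
    """Accuracy per group, where `key(record)` returns the group of a record."""
    totals, hits = defaultdict(int), defaultdict(int)
    for record in records:
        group = key(record)
        totals[group] += 1
        hits[group] += record["correct"]  # True counts as 1
    return {group: hits[group] / totals[group] for group in totals}


def make_subgroup(gender, skin, n_correct, n_total):
    """Build hypothetical evaluation records for one subgroup."""
    return [
        {"gender": gender, "skin": skin, "correct": i < n_correct}
        for i in range(n_total)
    ]


records = (
    make_subgroup("male", "lighter", 4, 4)
    + make_subgroup("male", "darker", 4, 4)
    + make_subgroup("female", "lighter", 4, 4)
    + make_subgroup("female", "darker", 1, 4)
)

overall = sum(r["correct"] for r in records) / len(records)
by_gender = accuracy_by(records, key=lambda r: r["gender"])
by_subgroup = accuracy_by(records, key=lambda r: (r["gender"], r["skin"]))

print(overall)  # 0.8125
print(by_gender)  # {'male': 1.0, 'female': 0.625}
print(by_subgroup[("female", "darker")])  # 0.25
```

With this data, the roughly 81% overall accuracy and even the 62.5% accuracy for women understate the problem: the model is right only 25% of the time for darker-skinned women.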

Follow Ethical Guidelines

Formulate ethical guidelines for developing AI systems that respect fairness, privacy, safety, and human rights. Enforce those guidelines across your organization so that fairness improves organization-wide and is reflected in the AI system’s outputs.

Set Controls and Responsibilities

Set clear responsibilities for everyone in the team working on the design, development, maintenance, and deployment of the AI system. You must also set proper controls with strict protocols and frameworks to address biases, errors, and other concerns.

Apart from the above, carry out regular audits to reduce biases and strive for continuous improvement. Also, stay up to date with changes in technology, demographics, and other factors.

Real-World Examples of Algorithmic Biases

#1. Amazon’s Algorithm

Amazon is a leader in the e-commerce industry. However, its AI-based recruitment tool for evaluating job applicants according to their qualifications showed gender bias. The system was trained on resumes previously submitted for technical roles.

Unfortunately, most of those resumes came from men, and the AI learned that pattern. As a result, it favored male applicants for technical roles over women, who were underrepresented in the data. Amazon had to discontinue the tool in 2017 despite efforts to reduce the bias.

#2. Racist US Healthcare Algorithms

An algorithm that US hospitals used to predict which patients would need additional care was heavily biased in favor of white patients. It estimated patients’ medical needs from their healthcare spending history, treating cost as a proxy for medical need.

The algorithm didn’t account for differences in how white and Black patients paid for healthcare. Even with uncontrolled illnesses, Black patients spent mostly on emergencies, so their recorded costs were lower. As a result, they were categorized as healthier and qualified for additional care less often than white patients.

#3. Google’s Discriminating Algorithm

Google’s online ad system was found to be discriminatory: it showed ads for high-paying positions, such as CEO roles, to men significantly more often than to women. Similarly, although 27% of US CEOs were women, women made up only around 11% of the CEOs represented in Google’s results.

The algorithm may have produced this output by learning from user behavior: if most people who view and click on ads for high-paying roles are men, the algorithm learns to show those ads to men more often than to women.

Conclusion

Algorithmic bias in ML and AI systems can lead to unfair results. These results can impact individuals in various fields, from healthcare, cybersecurity, and e-commerce to elections, employment, and more. It can lead to discrimination on the basis of gender, race, demographics, sexual orientation, and other aspects.

Hence, it’s important to reduce biases in AI and ML algorithms to promote fairness in results. The above information will help you detect biases and reduce them so you can create fair AI systems for users.

Disclaimer: Cryptoshitcompra.com is not responsible for any investment made by any visitor. We simply provide information about tokens, NFT games, and cryptocurrencies; we do not recommend investments.