Wondering how to get reliable and consistent data for data analytics? Implement these data-cleansing strategies now!

Your business decisions rely on insights from data analytics, and those insights, in turn, rely on the quality of the source data. Low-quality, inaccurate, inconsistent, or outright garbage source data is one of the toughest challenges for the data science and data analytics industry.

To tackle this problem, experts rely on data cleansing. It keeps you from making data-driven decisions that harm the business instead of improving it.

Read on to learn the data cleansing strategies that successful data scientists and analysts use. You will also discover tools that can deliver clean data for your data science projects.

What is Data Cleansing?

Data cleansing is the process of identifying and correcting errors in your input data according to your data quality policies. Those policies typically measure data quality along five dimensions.

The quality parameters of this five-dimension standard are:

#1. Completeness

This quality control parameter ensures that the input data has all the required parameters, headers, rows, columns, tables, etc., for a data science project.
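If your data arrives as rows of records, you can script a completeness check. The following Python sketch assumes each row is a dictionary and uses a hypothetical set of required fields. It reports two things: any required column that never appears, and any row where a required field is empty.

```python
# Hypothetical required fields for an example sales-analysis project.
REQUIRED_FIELDS = {"customer_id", "order_date", "amount"}

def completeness_report(rows, required=REQUIRED_FIELDS):
    """Return (required columns never seen, indices of rows with an empty required field)."""
    # Union of every key that appears in any row.
    present = set().union(*(row.keys() for row in rows))
    missing_columns = sorted(required - present)
    # A field counts as empty if it is absent, None, or an empty string.
    incomplete_rows = [
        i for i, row in enumerate(rows)
        if any(row.get(field) in (None, "") for field in required)
    ]
    return missing_columns, incomplete_rows

rows = [
    {"customer_id": "C1", "order_date": "2024-01-05", "amount": 19.99},
    {"customer_id": "C2", "order_date": "", "amount": 5.00},
]
print(completeness_report(rows))  # ([], [1])
```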

#2. Accuracy

This indicator shows how close the data is to the true values it represents. Data is more likely to reflect true values when you follow established statistical standards for surveys or web scraping during data collection.

#3. Validity

This parameter ensures that the data complies with the business rules you have set up.
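You can express business rules as small checks that every record must pass. Below is a minimal Python sketch. The rule names and conditions are hypothetical examples, not rules from any particular business. Each rule maps a name to a predicate, and the validator lists the rules a record breaks.

```python
from datetime import date

# Hypothetical business rules: each maps a rule name to a predicate on one record.
RULES = {
    "amount_positive": lambda r: r["amount"] > 0,
    "country_supported": lambda r: r["country"] in {"US", "CA"},
    "not_in_future": lambda r: r["order_date"] <= date.today(),
}

def validate(record, rules=RULES):
    """Return the names of all rules the record violates."""
    return [name for name, check in rules.items() if not check(record)]

record = {"amount": -5, "country": "US", "order_date": date(2024, 1, 5)}
print(validate(record))  # ['amount_positive']
```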

#4. Uniformity

Uniformity confirms whether the data uses consistent formats and units. For example, energy consumption survey data from the US should express all measurements in imperial (US customary) units. If some entries in the same survey use the metric system, the data is not uniform.
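A simple way to enforce uniformity is to convert every reading to one unit before analysis. The Python sketch below assumes energy readings arrive as (value, unit) pairs and converts them to British thermal units (BTU). The unit labels are assumptions for illustration, and the conversion factors are rounded approximations. Unknown units raise an error instead of passing through silently.

```python
# Approximate conversion factors to British thermal units (International Table BTU).
TO_BTU = {"BTU": 1.0, "kWh": 3412.14, "MJ": 947.817}

def to_btu(value, unit):
    """Convert one energy reading to BTU; raise if the unit is unknown."""
    try:
        return value * TO_BTU[unit]
    except KeyError:
        raise ValueError(f"unknown unit: {unit!r}") from None

readings = [(1000.0, "BTU"), (2.0, "kWh"), (1.0, "MJ")]
uniform = [round(to_btu(v, u), 1) for v, u in readings]
print(uniform)  # [1000.0, 6824.3, 947.8]
```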

#5. Consistency

Consistency ensures that the data values are consistent between tables, data models, and datasets. You also need to monitor this parameter closely when moving data across systems.
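One way to check consistency is to compare the same field for records that share a key across two tables. In this Python sketch, the "CRM" and "billing" tables and their field names are hypothetical. The function returns the keys whose values disagree.

```python
def find_inconsistencies(table_a, table_b, key, field):
    """Return keys whose `field` value differs between two lists of dict rows."""
    a = {row[key]: row[field] for row in table_a}
    b = {row[key]: row[field] for row in table_b}
    # Only compare keys present in both tables.
    return sorted(k for k in a.keys() & b.keys() if a[k] != b[k])

crm = [{"id": 1, "email": "ann@example.com"}, {"id": 2, "email": "bo@example.com"}]
billing = [{"id": 1, "email": "ann@example.com"}, {"id": 2, "email": "bob@example.com"}]
print(find_inconsistencies(crm, billing, "id", "email"))  # [2]
```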

In a nutshell, apply the above quality control processes to raw datasets and cleanse data before feeding it to a business intelligence tool.

Importance of Data Cleansing

Just as you cannot run a digital business on a poor internet connection, you cannot make good decisions when data quality is unacceptable. If you base business decisions on garbage or erroneous data, you risk lost revenue or a poor return on investment (ROI).

According to a Gartner report on poor data quality and its consequences, poor data quality costs organizations an average of $12.9 million per year. That loss comes from relying on erroneous, falsified, and garbage data to make decisions.

A widely cited estimate puts the yearly cost of bad data to the US economy at a staggering $3 trillion.

The final insight will surely be garbage if you feed the BI system with garbage data.

Therefore, you must cleanse the raw data to avoid monetary losses and make effective business decisions from data analytics projects.

Benefits of Data Cleansing

#1. Avoid Monetary Losses

By cleansing the input data, you can save your company from monetary losses such as noncompliance penalties or lost customers.

#2. Make Great Decisions

High-quality and actionable data delivers great insights. Such insights help you to make outstanding business decisions about product marketing, sales, inventory management, pricing, etc.

#3. Gain an Edge Over the Competitor

If you opt for data cleansing earlier than your competitors, you will enjoy the benefits of becoming a fast mover in your industry.

#4. Make the Project Efficient

A streamlined data cleansing process increases the confidence level of the team members. Since they know the data is reliable, they can focus more on data analytics.

#5. Save Resources

Cleansing and trimming data reduces the overall size of the database, so eliminating garbage data frees up storage space.

Strategies to Cleanse Data

Standardize the Visual Data

A dataset typically contains many types of characters, such as text, digits, and symbols. Apply a uniform capitalization format to all text, and make sure symbols use one consistent character encoding, such as UTF-8 (Unicode) or ASCII.

For example, the capitalized term "Bill" refers to a person's name, whereas "a bill" or "the bill" refers to an invoice or receipt for a transaction. Appropriate capitalization formatting is therefore crucial.
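Capitalization carries meaning, so apply case rules one column at a time instead of to the whole dataset. The Python sketch below assumes a column that is known to hold personal names. It does three things. First, it normalizes Unicode to NFC form, so characters that look identical share one encoding. Second, it collapses whitespace. Third, it applies title case.

```python
import unicodedata

def standardize_name(raw):
    """Normalize Unicode, collapse whitespace, and title-case a personal name."""
    text = unicodedata.normalize("NFC", raw)  # one canonical form for accented characters
    text = " ".join(text.split())             # trim and collapse internal whitespace
    return text.title()                       # capitalize the first letter of each word

print(standardize_name("  bILL   smith "))  # Bill Smith
```

`str.title()` is a blunt tool. It turns "McDonald" into "Mcdonald", for example, so real name data may need custom rules.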

Remove Replicated Data

Duplicate records confuse the BI system and skew the patterns it detects. Hence, you need to weed out duplicate entries from the input database.

Duplicates usually come from manual data entry. If you can automate the raw data entry process, you can stop most duplicates at the source.
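Manually entered duplicates often differ only in case or stray spaces. The Python sketch below builds a normalized key from the fields you choose (the field names are hypothetical). It keeps the first row for each key and drops later copies. Matching near-duplicates with typos would need fuzzy matching, which this sketch does not attempt.

```python
def deduplicate(rows, key_fields):
    """Keep the first row for each normalized key; drop later duplicates."""
    seen = set()
    unique = []
    for row in rows:
        # Normalize case and surrounding whitespace so "Ann " and "ann" match.
        key = tuple(str(row[f]).strip().lower() for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique

rows = [
    {"name": "Ann", "email": "ann@example.com"},
    {"name": "ann ", "email": "ANN@example.com"},
    {"name": "Bo", "email": "bo@example.com"},
]
print(len(deduplicate(rows, ["name", "email"])))  # 2
```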

Fix Unwanted Outliers

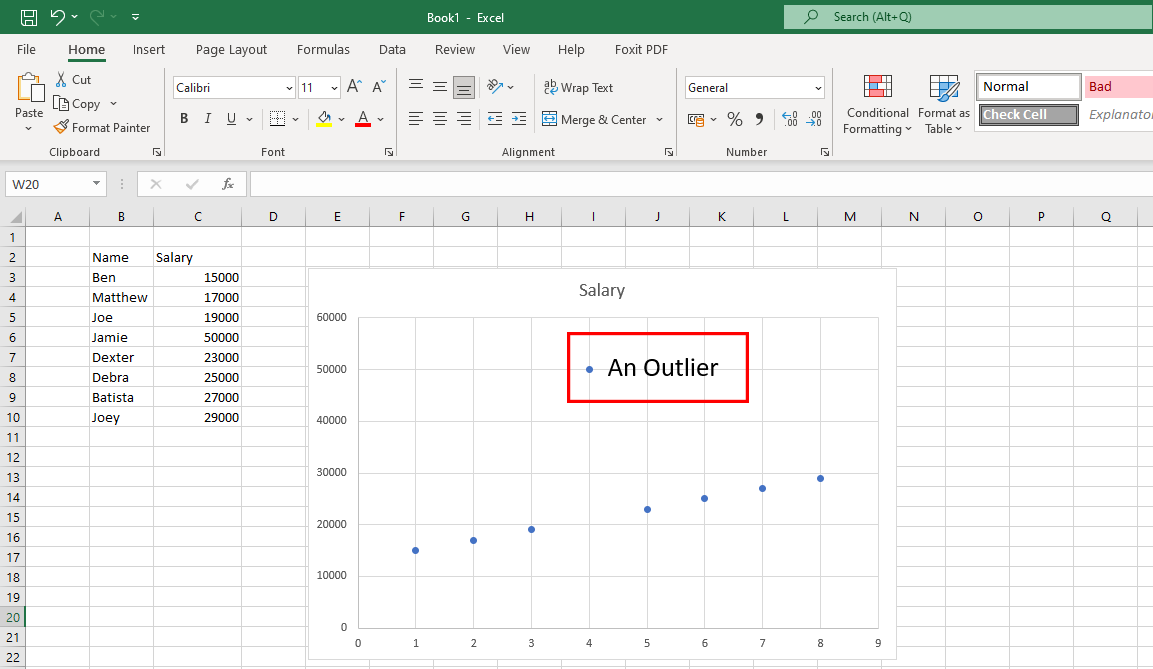

Outliers are unusual data points that do not fit the overall data pattern, as shown in the graph above. Genuine outliers are acceptable, since they help data scientists discover survey flaws. However, outliers caused by human error are a problem.

Plot the datasets in charts or graphs to look for outliers. If you find any, investigate the source, and remove the outlier data if it came from human error.
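After a chart shows you suspicious points, a rule of thumb can flag them in code. This Python sketch uses Tukey's interquartile range (IQR) fences, one common technique among several. It only flags values and does not delete them, so you can still investigate each one. The survey ages are made-up sample data.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Flag values outside the Tukey fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

survey_ages = [34, 29, 41, 38, 35, 33, 340]  # 340 looks like a data-entry typo
print(iqr_outliers(survey_ages))  # [340]
```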

Focus on Structural Data

This step is mostly about finding and fixing structural errors in the datasets, such as inconsistent formats or units.

For instance, suppose a dataset contains one column in USD and several columns in other currencies. If your data is meant for a US audience, convert the other currencies to their USD equivalents and replace the original values with the converted USD amounts.
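Here is a minimal Python sketch of the currency conversion. The exchange rates are hypothetical fixed values for illustration only. A real pipeline would use dated rates from a trusted source. `Decimal` avoids binary floating-point surprises when you round to cents.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical, fixed exchange rates (USD per unit of currency), illustration only.
USD_PER_UNIT = {"USD": Decimal("1"), "EUR": Decimal("1.10"), "GBP": Decimal("1.25")}

def to_usd(amount, currency):
    """Convert an amount to USD and round to whole cents."""
    usd = Decimal(str(amount)) * USD_PER_UNIT[currency]
    return usd.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

prices = [(100, "EUR"), (80, "GBP"), (19.99, "USD")]
print([str(to_usd(a, c)) for a, c in prices])  # ['110.00', '100.00', '19.99']
```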

Scan Your Data

A huge database downloaded from a data warehouse can contain thousands of tables. You may not need all the tables for your data science project.

Hence, after getting the database, write a script to pinpoint the tables you need. Once you have identified them, you can delete irrelevant tables and shrink the dataset.
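The script could look something like this Python sketch. It assumes the database is SQLite and that you already know which tables the project needs. The table names are hypothetical. `DROP TABLE` cannot be undone, so run a script like this on a copy of the database, never on the original.

```python
import sqlite3

def keep_only(conn, needed):
    """Drop every user table whose name is not in `needed`; return the kept tables."""
    tables = [name for (name,) in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' AND name NOT LIKE 'sqlite_%'"
    )]
    for name in tables:
        if name not in needed:
            quoted = name.replace('"', '""')  # escape quotes inside the identifier
            conn.execute(f'DROP TABLE "{quoted}"')
    conn.commit()
    return sorted(name for name in tables if name in needed)

# Demo on an in-memory database with hypothetical table names.
conn = sqlite3.connect(":memory:")
for table in ("orders", "customers", "web_logs", "hr_payroll"):
    conn.execute(f"CREATE TABLE {table} (id INTEGER)")
print(keep_only(conn, {"orders", "customers"}))  # ['customers', 'orders']
```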

This will ultimately result in faster data pattern discovery.

Cleanse Data on the Cloud

If your database uses a schema-on-write approach, consider converting it to schema-on-read. Schema-on-read lets you store raw data in the cloud, cleanse it directly in cloud storage, and apply structure only when you extract it. The result is formatted, organized, ready-to-analyze data.

Translate Foreign Languages

If you run a survey worldwide, you can expect foreign languages in the raw data. You must translate rows and columns containing foreign languages to English or any other language you prefer. You can use computer-assisted translation (CAT) tools for this purpose.

Step-by-Step Data Cleansing

#1. Locate Critical Data Fields

A data warehouse can hold terabytes of data spread across many databases, and each database can contain anywhere from a few to thousands of columns. Review the project objective and extract only the data it requires.

If your project studies eCommerce shopping trends of US residents, collecting data on offline retail shops in the same workbook will not do any good.

#2. Organize Data

Once you have located the important data fields, column headers, tables, etc., from a database, collate them in an organized way.

#3. Wipe Out Duplicates

Raw data collected from data warehouses often contains duplicate entries. You need to locate and delete those replicas.

#4. Eliminate Empty Values and Spaces

Some columns and their corresponding data fields may contain no values. Either remove those columns or fields, or replace the blank values with appropriate ones.
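Both options can be combined in one pass. This Python sketch treats `None` and whitespace-only strings as blank. It drops columns that are blank in every row, then fills the remaining blanks from a per-column mapping. The column names and the "UNKNOWN" placeholder are hypothetical. Choose fill values that make sense for each field.

```python
def is_blank(value):
    """Treat None and whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())

def handle_blanks(rows, fill=None):
    """Drop columns that are blank in every row, then fill remaining blanks.

    `fill` maps a column name to its replacement value; blanks in columns
    without an entry are normalized to None.
    """
    fill = fill or {}
    columns = {col for row in rows for col in row}
    keep = sorted(col for col in columns if any(not is_blank(row.get(col)) for row in rows))
    return [
        {col: fill.get(col) if is_blank(row.get(col)) else row.get(col) for col in keep}
        for row in rows
    ]

rows = [
    {"city": "Austin", "fax": "", "segment": "retail"},
    {"city": "  ", "fax": None, "segment": "retail"},
]
cleaned_rows = handle_blanks(rows, fill={"city": "UNKNOWN"})  # placeholder fill value
print(cleaned_rows)
```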

#5. Perform Fine Formatting

Datasets may contain unnecessary spaces, symbols, and characters. Clean these up using formulas so the overall dataset looks uniform in cell size and span.
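You can also script the same cleanup. This Python sketch works on a single cell. It removes non-printable characters such as zero-width spaces, turns tabs, newlines, and repeated spaces into single spaces, and trims both ends. Removing specific stray symbols would need an extra, project-specific rule.

```python
import re

def clean_cell(value):
    """Remove non-printable characters and collapse runs of whitespace in one cell."""
    # Keep printable characters and whitespace; drop control/format characters.
    text = "".join(ch for ch in value if ch.isprintable() or ch.isspace())
    text = re.sub(r"\s+", " ", text)  # tabs, newlines, and repeated spaces -> one space
    return text.strip()

print(repr(clean_cell("  Acme\tCorp \u200b\n ")))  # 'Acme Corp'
```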

#6. Standardize the Process

Create a standard operating procedure (SOP) that data science team members can follow to carry out their duties during the data cleansing process. It should include the following:

- Frequency of raw data collection

- Raw data storage and maintenance supervisor

- Cleansing frequency

- Clean data storage and maintenance supervisor

Here are some popular data cleansing tools that can help you in your data science projects:

WinPure

If you are looking for an application that lets you clean and scrub data accurately and quickly, WinPure is a reliable solution. This industry-leading tool offers enterprise-level data cleansing with unmatched speed and precision.

Because it is designed for both individual users and businesses, anyone can use it without difficulty. Its Advanced Data Profiling feature analyzes data types, formats, integrity, and values for quality checking. Its powerful, intelligent data-matching engine finds accurate matches with minimal false matches.

Apart from the above features, WinPure also offers stunning visuals for all data, group matches, and non-matches.

It also works as a merging tool that combines duplicate records into a master record that keeps all current values. You can also define rules for master record selection and remove duplicate records instantly.

OpenRefine

OpenRefine is a free and open-source tool for working with messy data: cleaning it, transforming it from one format into another, and extending it with web services and external data. It uses facets to explore large datasets and applies operations to filtered views of the data.

With the help of powerful heuristics, the tool can merge similar values to resolve inconsistencies. It offers reconciliation services, so users can match their datasets against external databases. In addition, you can revert to an earlier version of the dataset if necessary.

Users can also replay the operation history on an updated version of the dataset. If you are worried about data security, OpenRefine is a good option: it runs on your own machine, so your data is not migrated to the cloud for cleaning.

Trifacta Designer Cloud

While data cleansing can be complex, Trifacta Designer Cloud makes it easier for you. It uses a novel data preparation approach for data scrubbing so that organizations can get the most value out of it.

Its user-friendly interface enables non-technical users to clean and scrub data for sophisticated analysis. Now, businesses can do more with their data by leveraging the ML-powered intelligent suggestions of Trifacta Designer Cloud.

What's more, organizations spend less time on this process and deal with fewer mistakes, so they get more out of their analysis with fewer resources.

Cloudingo

Are you a Salesforce user worried about the quality of the data you collect? Use Cloudingo to clean up customer data and keep only the data you need. This application makes managing customer data easy with features like deduplication, import, and migration.

Here, you can control record merging with customizable filters and rules and standardize data. Delete useless and inactive data, update missing data points, and ensure accuracy in US mailing addresses.

Businesses can also schedule Cloudingo to deduplicate data automatically, so they always have access to clean data. Keeping data synced with Salesforce is another key feature of this tool, and you can even compare Salesforce data with information stored in a spreadsheet.

ZoomInfo

ZoomInfo is a data-cleansing solution provider that contributes to the productivity and effectiveness of your team. Businesses can experience more profitability as this software delivers duplication-free data to company CRM and MATs.

It simplifies data quality management by removing costly duplicate data. Users can also secure their CRM and MAT perimeter with ZoomInfo, and it can cleanse data within minutes through automated deduplication, matching, and normalization.

Users of this application can enjoy flexibility and control over matching criteria and merged results. It helps you build a cost-effective data storage system by standardizing any type of data.

Final Words

You should care about the quality of the input data in your data science projects. It is the basic feed for large projects such as machine learning (ML) and neural networks for AI-based automation. If the feed is faulty, consider what the results of those projects will be.

Hence, your organization needs to adopt a proven data cleansing strategy and implement that as a standard operating procedure (SOP). Consequently, the quality of input data will also improve.

If you are already busy with projects, marketing, and sales, it may be better to leave data cleansing to the experts, such as the data cleansing tools above.

