Sora AI is a text-to-video model developed by OpenAI, the company behind ChatGPT. It is still in development, so public access will have to wait for now. Even so, comparing it with other text-to-video generators is useful for anyone navigating the future of AI content creation.

OpenAI’s example videos demonstrate Sora AI’s proficiency in producing photo-realistic visuals. Furthermore, it doesn’t seem to have any issues creating visually pleasing cartoon-like animation. The character movements, lighting, proportions, and minute details (such as facial blemishes and fur texture) are fairly precise. As such, Sora AI is shaping up to become the ideal text-to-video AI model.

The best text-to-video AI generators available today are easy to use and create high-quality content, albeit with notable limitations. So far, even the most widely used generators can only accurately produce human faces, torsos, and voices. With Sora, OpenAI appears to be building capabilities that stand out from the competition.

Developing newer, more complex, and increasingly impressive AI models has become an arms race. While AI programmers are working hard to invent novel and useful tools, there’s also a need to implement safety protocols.

Preventing powerful models like Sora from being used to create inappropriate animation (hateful, copyright-infringing, or otherwise harmful) is vital, not only for our safety but also to enforce sensible best practices in the industry. OpenAI appears to be taking this seriously.

How to Access Sora AI

There’s currently no public access to Sora AI. Only a few individuals can use this text-to-video model. Besides “red teamers” researching how to make usage as safe as possible, OpenAI has provided access to a few artists, designers, and filmmakers for testing purposes.

In a recent FAQ article about accessing Sora, OpenAI stated that it has no timeline for public availability. Moreover, the company is not sharing details about who outside its offices is involved. It did mention, without specifics, that it is engaging with policymakers, educators, and others to gather feedback on the responsible development and use of Sora.

Can You Become a Sora AI Tester?

So far, there’s no official way to become a tester for Sora—or a member of OpenAI’s “red teamers.” There have been many threads about such questions on OpenAI’s community forum. However, moderators quickly remind users there’s no official way to sign up for Sora testing. Following such reminders, threads are closed to avoid confusing other users.

OpenAI is determined to make Sora as safe as possible before letting the general public get their hands on this complex AI model. The core reason behind the lack of details is also security. Giving out information about testers is unsafe, as they could be targeted.

As such, it may be a while before the public gets access to Sora. OpenAI is hyping the quality of its in-development AI model, so a general release may not be too far into the future. Most companies tend to market their services and products well before launch—but not usually years in advance. Therefore, a reasonable speculation for Sora AI’s public release would be later in 2024 or 2025.

Examples of How Sora AI Works

Sora boasts industry-defining animation in many areas (lighting, minute details, and more), but it still falls into the usual AI pitfalls.

What Sora AI Can’t Do Yet

There are some issues interpreting concepts and directions. For example, when told to generate footage of a “Hermit crab using an incandescent lightbulb as its shell,” Sora produces visuals of a crab-like creature with a generic shell on its back that possesses a lightbulb on its rear end.

In this beach clip, it’s clear that the character is only vaguely crab-like. Yes, it has legs and claws, but no living species of crab (or crab-like animal) has a shell like the one depicted in Sora’s imagery. Moreover, the lightbulb is not being used as a shell; it’s merely attached to the back of the character’s frame.

Another example can be found on OpenAI’s dedicated webpage about Sora. One of the five videos outlining Sora’s current weaknesses depicts a man running on a treadmill in reverse. Directions such as up, down, left, and right are currently challenging for Sora to interpret. OpenAI has been transparent about where its in-development text-to-video model is lacking, which suggests the company knows where and how to improve.

Overall, there are five known major issues with Sora AI’s graphics:

- Directional interpretation

- Characters and objects appear and disappear

- Objects and characters move through one another

- Defining when objects are meant to be rigid or soft

- Determining the outcomes of physical interactions between characters and objects

Keep in mind that Sora AI still has a long way to go before it’s ready for the public. As such, its current failings are bound to be addressed and corrected—at least to some degree.

What Sora AI Does Well

While OpenAI’s upcoming text-to-video generator can’t handle all aspects of intimate character and object interactions, the visuals are nearly flawless when the focus is on the environment.

Sweeping camera shots depicting coastal scenery and snowy bird’s-eye views of Tokyo are beautifully realistic. Equally, faux wildlife footage looks convincing.

What’s more, Sora is adept at combining organic and inorganic components when the instructions are vague. Unlike complex and unique prompts like “Give a hermit crab a lightbulb for a shell,” simpler ones with more freedom such as “Cybernetic German Shepherd” yield more appealing results.

Remember that AI models need to learn through ingesting data. There are many more visual examples of dogs with prosthetics than images of hermit crabs with glass objects on their backs.

On a similar note, Sora AI has access to a wealth of nature footage to produce documentary-style animation almost indistinguishable from real life. Generating visuals like a butterfly resting on a flower is consistent and elegant.

For inverse reasons, Sora can create impressively aesthetic content in completely fictional settings. When there is less real-world physics to consider, text-to-video AI models often logically fill in the gaps in prompt information. Therefore, simulations representing science fiction concepts like drones racing on the planet Mars look great—albeit not realistic (by nature of the prompt).

Prompts for more cartoon-like animation benefit from this freedom too. If the wording of a prompt is fine-tuned, Sora can turn a paragraph’s worth of input text into a stretch of animation on par with the likes of Pixar and other big-name animation studios. The outcome could be the adorable frolicking of a spherical squirrel, or anything else the user desires.

The Weird World of Text-to-Video Generators

In addition to those flocking toward AI video generators for design and filmmaking goals, some wish to access Sora for fun. By pushing the limits of prompt details, users can end up with some hilarious—and bizarre—content. From surrealistic bicycle races across oceans to merging with a blanket on a bed, some outputs are enjoyed more for their comedic value than for artistic purposes.

On one hand, the fantastical aspects are enticing for creative types looking for amusement. On the other hand, such visuals are sure to make the development of films easier. For instance, if a director wanted to shoot a scene set in an alternate reality where animals eat jewelry, there would be tons of animal rights issues, to say the least. With access to Sora AI, however, such scenes can be generated on a computer with no risk to any animal.

These days, the film industry is much better than it used to be at treating non-humans with compassion on set. If it wasn’t, surely this Golden Retriever podcast would have something to say about it! In all seriousness, text-to-video generators will revolutionize how movies are made.

A Look Into Sora AI’s Technical Processes

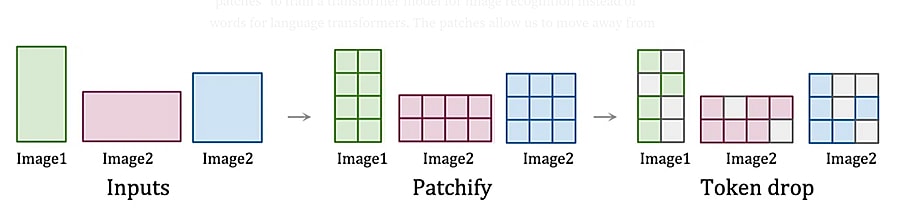

To understand how Sora can create stunning vistas and perform magic tricks with a spoon, look at OpenAI’s technical report. The process starts with compressing visual data and breaking it into spacetime patches that act as transformer tokens. In layman’s terms, a spacetime patch is a small section of visual information that covers a small area of the frame over a short span of time. Tokens are the smallest units of data a transformer model works with, and here each patch is one token.

Breaking the process down further:

- Image inputs are identified

- The image inputs are dissected into patches

- Random patches (tokens) are analyzed until all have been processed

Patching visual data and turning it into tokens lets AI models learn efficiently. It allows Sora to determine how elements are related, when actions are supposed to happen, and a great deal more. It also lets Sora consume visual data at multiple resolutions and aspect ratios. Specifically, ingesting images and videos in their native formats teaches the model to gauge various visual aspects more accurately by comparing the differences between tokens.
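To make the idea of spacetime patches concrete, here is a minimal sketch in Python with NumPy. It is not OpenAI's implementation: the function name `to_spacetime_patches`, the patch sizes, and the choice to work on raw pixels (rather than on a compressed representation) are all assumptions made for illustration. It only shows how a clip can be cut into small blocks that each span a few frames and a small area, with one block becoming one token.

```python
import numpy as np

def to_spacetime_patches(video, pt, ph, pw):
    """Split a video array of shape (T, H, W, C) into flattened spacetime patches.

    Hypothetical helper for illustration only. Each patch covers `pt` frames
    and a `ph` x `pw` pixel area. Returns an array of shape
    (num_patches, pt * ph * pw * C), one row per token.
    """
    t, h, w, c = video.shape
    if t % pt or h % ph or w % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    # Split each axis into (number of patches, patch size).
    grid = video.reshape(t // pt, pt, h // ph, ph, w // pw, pw, c)
    # Move the three patch-index axes to the front, patch contents to the back.
    grid = grid.transpose(0, 2, 4, 1, 3, 5, 6)
    return grid.reshape(-1, pt * ph * pw * c)

# A toy square clip: 8 frames of 16x16 RGB.
square = np.arange(8 * 16 * 16 * 3, dtype=np.float32).reshape(8, 16, 16, 3)
square_tokens = to_spacetime_patches(square, pt=2, ph=4, pw=4)
print(square_tokens.shape)  # (64, 96)

# A toy widescreen clip: same patch size, different aspect ratio.
wide = np.zeros((8, 8, 32, 3), dtype=np.float32)
wide_tokens = to_spacetime_patches(wide, pt=2, ph=4, pw=4)
print(wide_tokens.shape)  # (64, 96)

# A shorter, smaller clip simply yields fewer tokens of the same size.
small = np.zeros((4, 8, 8, 3), dtype=np.float32)
small_tokens = to_spacetime_patches(small, pt=2, ph=4, pw=4)
print(small_tokens.shape)  # (8, 96)
```

Every token has the same length regardless of the clip's shape; only the number of tokens changes. That is one plausible reading of why a patch-based representation can handle multiple resolutions and aspect ratios without cropping or resizing.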

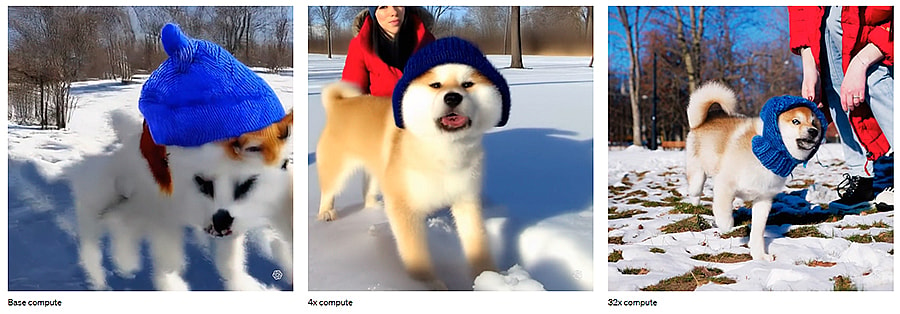

The process doesn’t end there. After the tokens are ingested, the model runs many rounds of computation for each piece of content it generates. The amount of compute used in training matters as well: at base compute, the generated video looks abstract, but as training compute increases, Sora becomes more and more capable of producing visuals analogous to real life.

Author’s Opinion

What’s been seen of Sora so far blows the competition out of the water. Existing text-to-video generators are only viable for inserting a human host into content. Nothing currently on the market can create complex, stunning, and (mostly) realistic videos from only text prompts.

To balance the scales: Sora AI has a long way to go before the public should be allowed to access it. With that said, it shows incredible promise for many artistic and scientific purposes. Not only can it be a fantastic tool in filmmaking, but it may one day allow scientists to simulate previously impossible scenarios. Yes, Sora’s concept of physics needs to improve a lot before it can be used for the latter reason; however, it—and other AI models like it—will likely get there in a few years.

For instance, imagine a world where the brightest minds can use text-to-video generators to simulate the outcomes of various accidents. Doing so can help researchers create safety suits of unparalleled quality at a fraction of their previous budget. In turn, this would cut down on injuries, medical bills, and hospital operation costs—all of which would benefit society.

However, that’s a way off yet. While leaders in the industry are working on deepening the understanding of Sora and similar models, there are plenty of wacky AI videos to enjoy. Until then, sit back, relax, and plan for the future.

FAQs

Sora AI is not currently open to the public, and there is no release timeline.

There is no information about pricing for Sora AI. The model is still in development and is undergoing testing.
